Multi-view Inverse Rendering for Large-scale Real-world Indoor Scenes

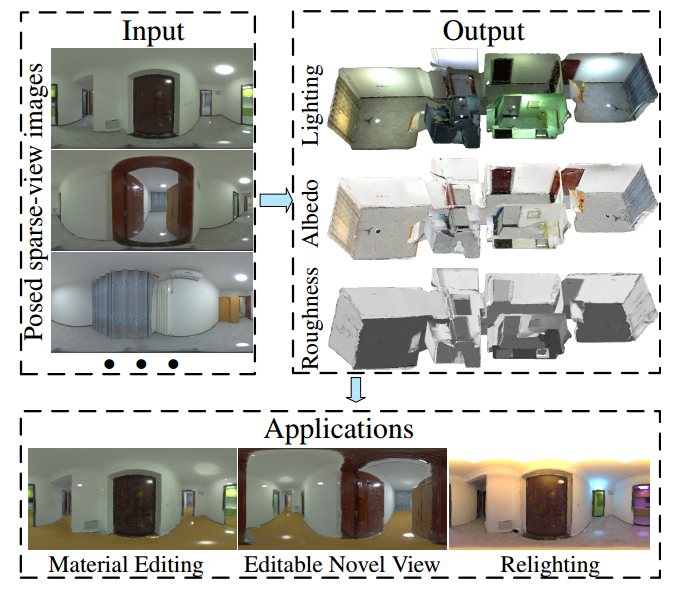

Figure 1. Given a set of posed sparse-view images of a large-scale scene, we reconstruct global illumination and SVBRDFs. The recovered properties enable convincing results in several mixed-reality applications, such as material editing, editable novel view synthesis, and relighting. Note that we change the roughness of all walls and the albedo of all floors. The detailed specular reflections show that our method successfully decomposes the scene into physically reasonable SVBRDFs and lighting. Please refer to the supplementary videos for more animations.

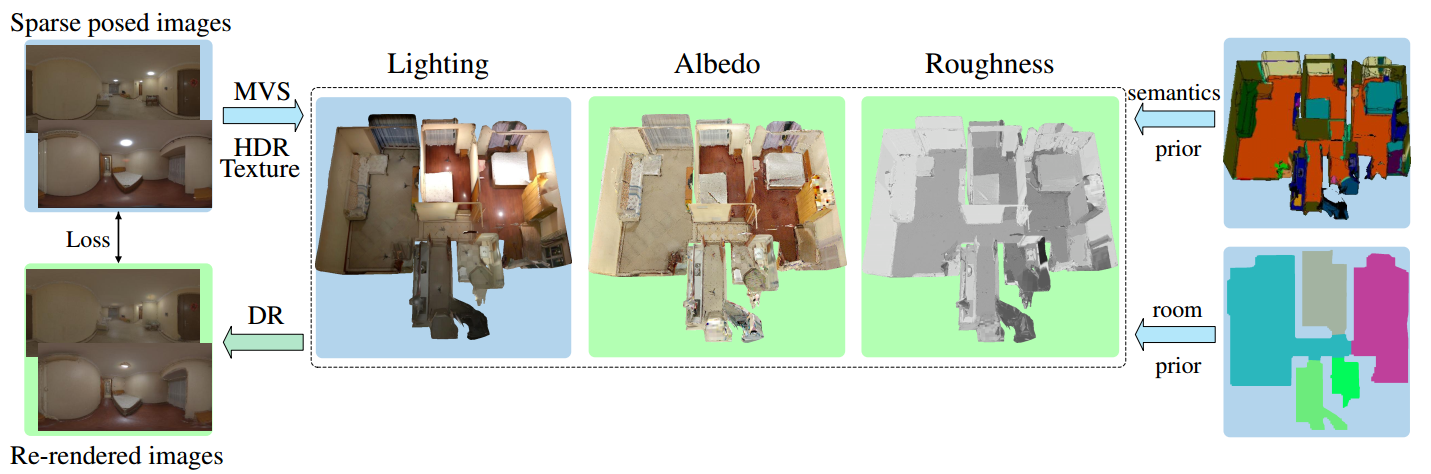

Figure 2. Overview of our inverse rendering pipeline. Given sparse calibrated HDR images of a large-scale scene, we reconstruct the geometry and HDR textures, which serve as our lighting representation. The PBR material textures of the scene, including albedo and roughness, are optimized by differentiable rendering (DR). The ambiguity between materials is resolved using a semantic prior and a room segmentation prior. Gradients flow through the components shown on a green background.
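As a rough illustration of the material-optimization stage, the sketch below fits a per-pixel albedo by gradient descent on a photometric loss. It assumes a purely Lambertian model whose irradiance E is already known (e.g. precomputed from the lighting) and uses hand-derived gradients in place of a differentiable renderer. The semantic term, which pulls each albedo toward the mean of its semantic segment, is a hypothetical stand-in for the semantic prior; the helper name `optimize_albedo`, the weight `lam`, and the step-size rule are assumptions, not the paper's formulation.

```python
import numpy as np

def optimize_albedo(observed, irradiance, labels, lam=0.1, steps=500):
    """Toy diffuse-only inverse rendering (hypothetical helper, not the paper's method).

    observed:   (N,) observed radiance per pixel
    irradiance: (N,) known irradiance E per pixel
    labels:     (N,) integer semantic segment ids used by the smoothness prior
    """
    k = irradiance / np.pi                       # Lambertian: L = albedo * E / pi
    step = 0.25 / (np.max(k) ** 2 + lam)         # conservative step size for stability
    albedo = np.full_like(observed, 0.5, dtype=float)
    for _ in range(steps):
        residual = albedo * k - observed         # re-rendered minus observed radiance
        grad = 2.0 * residual * k                # gradient of the squared photometric loss
        for s in np.unique(labels):
            m = labels == s
            # gradient of lam * sum((a - mean(a))^2) over one segment
            grad[m] += 2.0 * lam * (albedo[m] - albedo[m].mean())
        albedo = np.clip(albedo - step * grad, 0.0, 1.0)  # keep albedo in a valid range
    return albedo
```

For instance, with E = π everywhere, observations rendered from albedos (0.2, 0.2, 0.8, 0.8) and labels (0, 0, 1, 1), the recovered albedo converges to the ground truth, since the segment prior is already satisfied there. In a real scene the prior matters when the photometric term alone is ambiguous or noisy.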

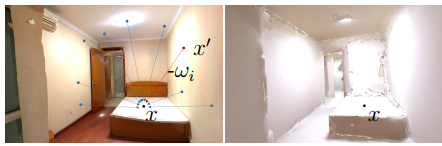

Figure 3. Visualization of TBL (left) and precomputed irradiance (right). For any surface point x, the incident radiance from direction −ωi can be queried from the HDR texture at the point x′, where x′ is the intersection of the ray r(t) = x + tωi with the geometry. The irradiance at x can be queried directly from the precomputed irradiance via NIrF or IrT.
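The two lookups in Figure 3 can be sketched under simplifying assumptions: the geometry is a hypothetical axis-aligned box room, so the ray r(t) = x + tωi can be intersected analytically, and `texture` is a hypothetical callable returning the HDR radiance stored at a surface point. Irradiance at x is estimated with cosine-weighted Monte Carlo sampling of the hemisphere around the normal; this is the quantity that a precomputed cache such as NIrF or IrT would store, though how the paper builds those caches is not shown here.

```python
import numpy as np

def exit_distance(origins, dirs, lo, hi):
    """Ray parameter t at which rays starting inside the box [lo, hi] hit a wall."""
    with np.errstate(divide="ignore", invalid="ignore"):
        bound = np.where(dirs > 0, hi, lo)    # wall each axis is moving toward
        t = (bound - origins) / dirs
    t = np.where(dirs == 0, np.inf, t)        # axes the ray does not move along
    return t.min(axis=1)

def cosine_sample(normal, n, rng):
    """n unit directions on the hemisphere around `normal`, with pdf cos(theta) / pi."""
    u1, u2 = rng.random(n), rng.random(n)
    r, phi = np.sqrt(u1), 2.0 * np.pi * u2
    local = np.stack([r * np.cos(phi), r * np.sin(phi), np.sqrt(1.0 - u1)], axis=1)
    helper = np.array([1.0, 0.0, 0.0]) if abs(normal[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    tangent = np.cross(helper, normal)
    tangent /= np.linalg.norm(tangent)
    bitangent = np.cross(normal, tangent)
    return local @ np.stack([tangent, bitangent, normal])  # local frame -> world frame

def irradiance(x, normal, texture, lo, hi, n=4096, seed=0):
    """Monte Carlo irradiance at x using texture-based lighting (toy box-room setup)."""
    rng = np.random.default_rng(seed)
    dirs = cosine_sample(normal, n, rng)          # sampled directions w_i
    origins = np.broadcast_to(x, dirs.shape)
    t = exit_distance(origins, dirs, lo, hi)
    hits = origins + t[:, None] * dirs            # x' = x + t * w_i on the geometry
    radiance = texture(hits)                      # incident radiance from -w_i, read at x'
    return np.pi * radiance.mean()                # E ~ pi * mean(L) for cosine-weighted samples
```

As a sanity check, a floor point x = (0.5, 0.5, 0) with normal (0, 0, 1) inside the unit box and a constant texture of radiance 1 gives E = π, the analytic irradiance under uniform unit radiance. Caching this value per surface point is what makes the right-hand lookup in Figure 3 cheap compared with tracing rays at every optimization step.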

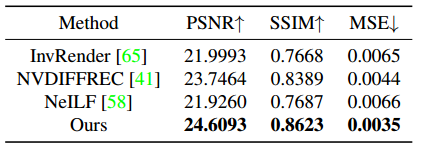

Table 1. Quantitative comparison on our synthetic dataset. Our method significantly outperforms state-of-the-art methods in roughness estimation. NeILF∗ [58] denotes the original method with its implicit lighting representation.

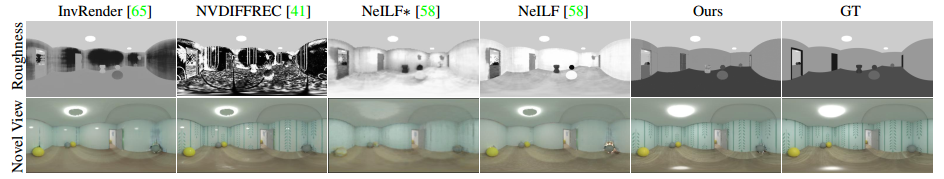

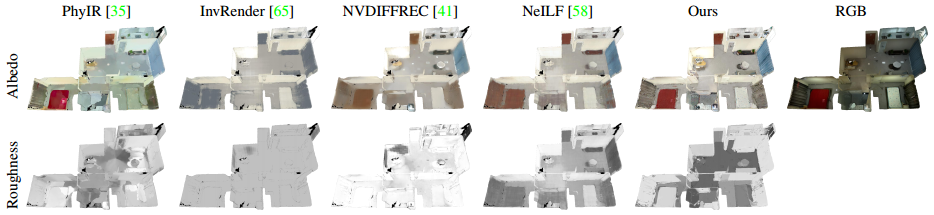

Figure 4. Qualitative comparison on our synthetic dataset. Our method produces realistic specular reflections. NeILF∗ [58] denotes the original method with its implicit lighting representation.

Figure 5. Qualitative comparison in the 3D view on the challenging real dataset. The scene shown is Scene 1. Our method reconstructs globally consistent and physically reasonable SVBRDFs, while other approaches struggle to produce consistent results and to disentangle the ambiguity between materials. Note that the low roughness (around 0.15 in ours) produces strong highlights similar to those in the GT.
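Why a low roughness yields strong, tight highlights can be checked with the GGX (Trowbridge-Reitz) normal distribution function D(h) = α² / (π((n·h)²(α² − 1) + 1)²), whose peak at n·h = 1 is 1/(πα²). The sketch assumes GGX and the common remapping α = roughness²; both are assumptions here, since the caption does not state which microfacet model or remapping is used.

```python
import numpy as np

def ggx_ndf(cos_nh, roughness):
    """GGX normal distribution D(h); alpha = roughness**2 is an assumed remapping."""
    alpha = roughness ** 2
    a2 = alpha ** 2
    denom = cos_nh ** 2 * (a2 - 1.0) + 1.0
    return a2 / (np.pi * denom ** 2)

def half_max_angle_deg(roughness, samples=100000):
    """Angle between n and h at which D first falls below half of its peak value."""
    theta = np.linspace(0.0, np.pi / 2, samples)
    d = ggx_ndf(np.cos(theta), roughness)
    return np.degrees(theta[np.argmax(d < 0.5 * d[0])])

for r in (0.15, 0.5, 0.8):
    print(f"roughness={r}: peak D={ggx_ndf(1.0, r):.2f}, "
          f"half-max angle={half_max_angle_deg(r):.2f} deg")
```

Under these assumptions, the peak of D at roughness 0.15 is several hundred times higher than at 0.8 and the lobe is confined to within about a degree of the mirror direction, which is consistent with the sharp highlights visible in the figure.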

Table 2. Quantitative comparison of re-rendered images on our real dataset.

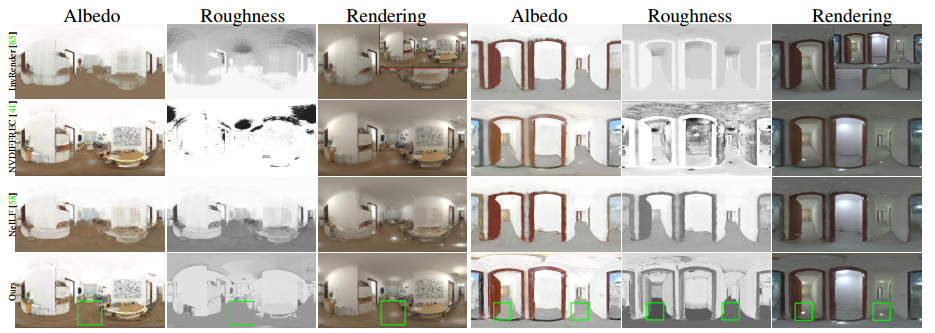

Figure 6. Qualitative comparison in the image view on the challenging real dataset. From left to right: Scene 8 and Scene 9. Red denotes the ground-truth (GT) image. Our physically reasonable materials render an appearance similar to the GT. Note that Invrender [65] and NeILF [58] do not produce correct highlights, and NVDIFFREC [41] fails to resolve the ambiguity between albedo and roughness.

Table 3. Ablation study of roughness estimation on our synthetic dataset.

Figure 9. Ablation study of our material optimization strategy in the 3D mesh view on the challenging real dataset. The scene shown is Scene 11. In the baseline, albedo and roughness are optimized jointly.

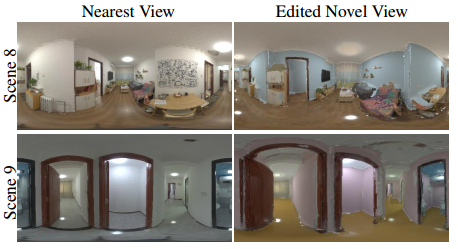

Figure 10. Editable novel view synthesis. In Scene 8, we edit the albedo of the wall and the roughness of the floor. In Scene 9, we edit the albedo of the floor and the wall. Our method produces convincing results (note the lighting effects on the floor and wall).
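Because materials are stored as textures, an edit like those in Figure 10 amounts to overwriting texel values selected by a semantic mask and then re-rendering. The sketch below assumes per-texel semantic labels with hypothetical ids `WALL` and `FLOOR`, and re-shades only the diffuse term from a precomputed irradiance map. The specular term, which is what a roughness edit affects, would require the full renderer and is omitted.

```python
import numpy as np

WALL, FLOOR = 1, 2  # hypothetical semantic label ids

def edit_materials(albedo, roughness, labels, wall_albedo=None, floor_roughness=None):
    """Return edited copies of the (H, W, 3) albedo and (H, W) roughness textures."""
    albedo, roughness = albedo.copy(), roughness.copy()  # leave the inputs untouched
    if wall_albedo is not None:
        albedo[labels == WALL] = wall_albedo             # recolor every wall texel
    if floor_roughness is not None:
        roughness[labels == FLOOR] = floor_roughness     # make the floor glossier or rougher
    return albedo, roughness

def shade_diffuse(albedo, irradiance):
    """Lambertian re-shading: L = albedo * E / pi, with E an (H, W) irradiance map."""
    return albedo * irradiance[..., None] / np.pi
```

Working on copies keeps the reconstructed textures intact, so several edits can be previewed from the same optimized materials.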